Two ways to import an XML file with .NET Core or .NET Framework

It's always the simple stuff you forget how to do. For years I've mainly been working with JSON files, so when faced with the task of reading an XML file my brain went "I can do that" followed by "actually, how did I used to do that?".

So here are two different methods. They work on .NET Core and, in theory, on .NET Framework (my project is .NET Core and I haven't checked that they actually work on Framework).

My examples use an XML file in the following format:

    <?xml version="1.0" encoding="utf-8"?>
    <jobs>
      <job>
        <company>Construction Co</company>
        <sector>Construction</sector>
        <salary>£50,000 - £60,000</salary>
        <active>true</active>
        <title>Recruitment Consultant - Construction Management</title>
      </job>
      <job>
        <company>Medical Co</company>
        <sector>Healthcare</sector>
        <salary>£60,000 - £70,000</salary>
        <active>false</active>
        <title>Junior Doctor</title>
      </job>
    </jobs>

Method 1: Reading an XML file as a dynamic object

The first method is to load the XML file into a dynamic object. This is cheating slightly, because it first uses JsonConvert (from Newtonsoft.Json) to convert the XML document into a JSON string and then deserializes that string into a dynamic object.

    using Newtonsoft.Json;
    using System;
    using System.Collections.Generic;
    using System.Dynamic;
    using System.IO;
    using System.Text;
    using System.Xml;
    using System.Xml.Linq;

    namespace XMLExportExample
    {
        class Program
        {
            static void Main(string[] args)
            {
                string jobsxml = "<?xml version=\"1.0\" encoding=\"utf-8\"?><jobs> <job><company>Construction Co</company><sector>Construction</sector><salary>£50,000 - £60,000</salary><active>true</active><title>Recruitment Consultant - Construction Management</title></job><job><company>Medical Co</company><sector>Healthcare</sector><salary>£60,000 - £70,000</salary><active>false</active><title>Junior Doctor</title></job></jobs>";

                // Load the XML string into an XDocument via a stream
                byte[] byteArray = Encoding.UTF8.GetBytes(jobsxml);
                MemoryStream stream = new MemoryStream(byteArray);
                XDocument xdoc = XDocument.Load(stream);

                // Convert the XML to JSON, then deserialize the JSON into a dynamic object
                string jsonText = JsonConvert.SerializeXNode(xdoc);
                dynamic dyn = JsonConvert.DeserializeObject<ExpandoObject>(jsonText);

                foreach (dynamic job in dyn.jobs.job)
                {
                    // Each value stays null if the element is missing
                    string company = null;
                    if (IsPropertyExist(job, "company"))
                        company = job.company;

                    string sector = null;
                    if (IsPropertyExist(job, "sector"))
                        sector = job.sector;

                    string salary = null;
                    if (IsPropertyExist(job, "salary"))
                        salary = job.salary;

                    // Note: values come through as strings, so this is "true" or "false"
                    string active = null;
                    if (IsPropertyExist(job, "active"))
                        active = job.active;

                    string title = null;
                    if (IsPropertyExist(job, "title"))
                        title = job.title;

                    // A property that doesn't exist
                    string foop = null;
                    if (IsPropertyExist(job, "foop"))
                        foop = job.foop;
                }

                Console.ReadLine();
            }

            // Returns true if the dynamic object has a property with the given name
            public static bool IsPropertyExist(dynamic settings, string name)
            {
                if (settings is ExpandoObject)
                    return ((IDictionary<string, object>)settings).ContainsKey(name);

                return settings.GetType().GetProperty(name) != null;
            }
        }
    }

A foreach loop then goes through each of the jobs, and a helper function IsPropertyExist checks for the existence of a value before trying to read it.
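If the repeated check-then-read pattern gets tedious, the two steps can be folded into one lookup. This is only a sketch: GetStringOrNull and DynamicHelpers are names I've made up for illustration, and the job object is built by hand here rather than coming from JsonConvert.

```csharp
using System;
using System.Collections.Generic;
using System.Dynamic;

public static class DynamicHelpers
{
    // Hypothetical helper (not part of the example above): returns the named
    // property as a string, or null when the property does not exist.
    public static string GetStringOrNull(ExpandoObject source, string name)
    {
        // ExpandoObject exposes its members as a string-keyed dictionary
        var properties = (IDictionary<string, object>)source;
        return properties.TryGetValue(name, out object value) ? value?.ToString() : null;
    }
}

public static class Program
{
    public static void Main()
    {
        // Hand-built stand-in for one deserialized <job>
        var job = new ExpandoObject();
        ((IDictionary<string, object>)job)["company"] = "Construction Co";

        Console.WriteLine(DynamicHelpers.GetStringOrNull(job, "company"));             // Construction Co
        Console.WriteLine(DynamicHelpers.GetStringOrNull(job, "foop") ?? "(missing)"); // (missing)
    }
}
```

The trade-off is that everything comes back as a string, which matches how the values arrive in the dynamic example anyway.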

Method 2: Deserializing with XmlSerializer

My second approach is to turn the XML structure into classes and then deserialize the XML file into them.

This approach requires more code, but most of it can be auto-generated for us by Visual Studio, and we end up with strongly typed objects.

Creating the XML classes from XML

To create the classes for the XML structure:

1. Create a new class file and remove the class that gets created, i.e. you're just left with this:

    using System;
    using System.Collections.Generic;
    using System.Text;

    namespace XMLExportExample
    {

    }

2. Copy the content of the XML file to your clipboard

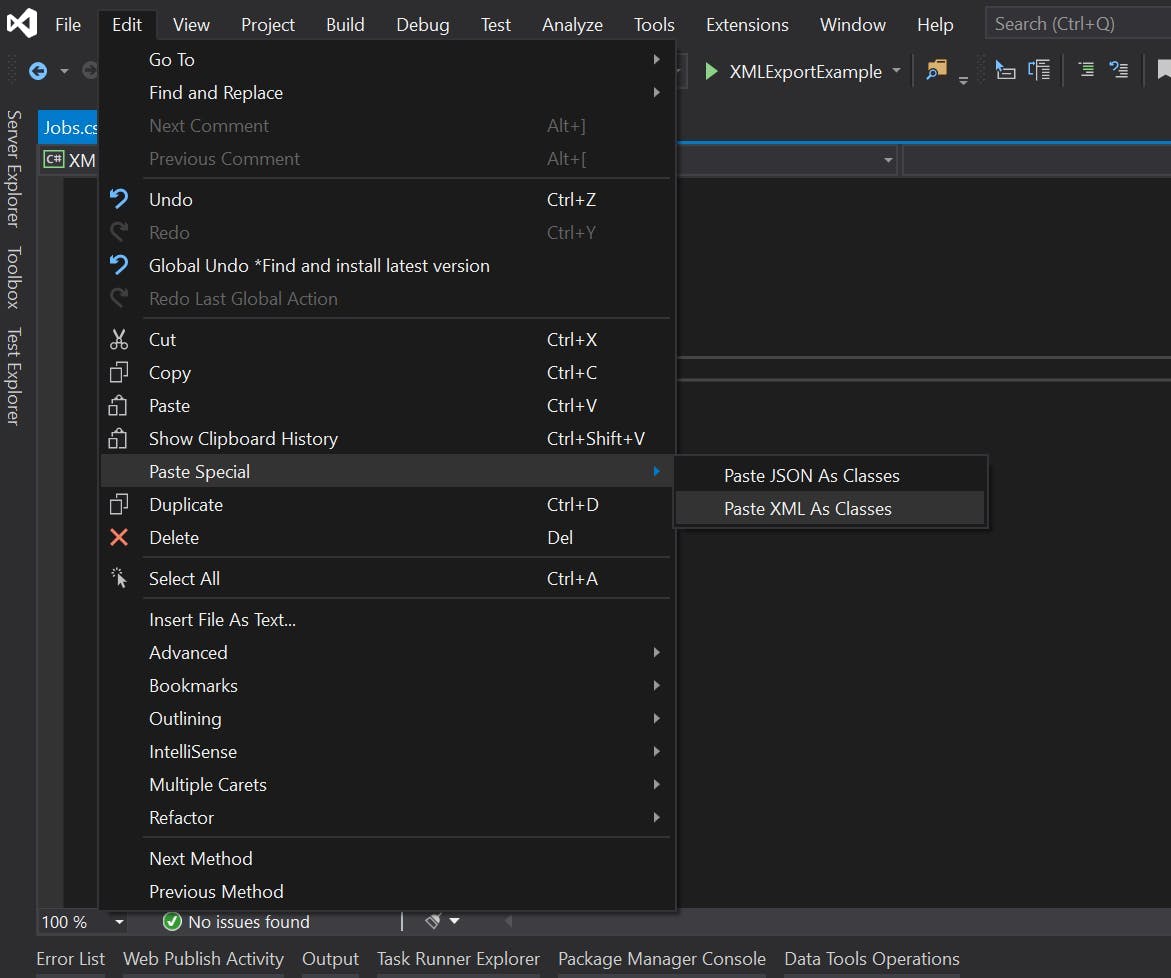

3. Select the position in the file where you want the classes to go and then go to Edit > Paste Special > Paste XML as Classes

If you're using my XML you will now have a class file that looks like this:

    using System;
    using System.Collections.Generic;
    using System.Text;

    namespace XMLExportExample
    {

        // NOTE: Generated code may require at least .NET Framework 4.5 or .NET Core/Standard 2.0.
        /// <remarks/>
        [System.SerializableAttribute()]
        [System.ComponentModel.DesignerCategoryAttribute("code")]
        [System.Xml.Serialization.XmlTypeAttribute(AnonymousType = true)]
        [System.Xml.Serialization.XmlRootAttribute(Namespace = "", IsNullable = false)]
        public partial class jobs
        {

            private jobsJob[] jobField;

            /// <remarks/>
            [System.Xml.Serialization.XmlElementAttribute("job")]
            public jobsJob[] job
            {
                get
                {
                    return this.jobField;
                }
                set
                {
                    this.jobField = value;
                }
            }
        }

        /// <remarks/>
        [System.SerializableAttribute()]
        [System.ComponentModel.DesignerCategoryAttribute("code")]
        [System.Xml.Serialization.XmlTypeAttribute(AnonymousType = true)]
        public partial class jobsJob
        {

            private string companyField;

            private string sectorField;

            private string salaryField;

            private bool activeField;

            private string titleField;

            /// <remarks/>
            public string company
            {
                get
                {
                    return this.companyField;
                }
                set
                {
                    this.companyField = value;
                }
            }

            /// <remarks/>
            public string sector
            {
                get
                {
                    return this.sectorField;
                }
                set
                {
                    this.sectorField = value;
                }
            }

            /// <remarks/>
            public string salary
            {
                get
                {
                    return this.salaryField;
                }
                set
                {
                    this.salaryField = value;
                }
            }

            /// <remarks/>
            public bool active
            {
                get
                {
                    return this.activeField;
                }
                set
                {
                    this.activeField = value;
                }
            }

            /// <remarks/>
            public string title
            {
                get
                {
                    return this.titleField;
                }
                set
                {
                    this.titleField = value;
                }
            }
        }

    }

Notice that the active field was even picked up as being a bool.
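The generated code is fairly verbose. If you'd rather write the classes by hand, something like the following should be roughly equivalent for this XML. Treat it as a sketch: the names JobList, Job and Parse are my own and not what Visual Studio generates, and the XmlRoot and XmlElement attributes are there to map the PascalCase properties back to the lowercase element names.

```csharp
using System;
using System.IO;
using System.Xml.Serialization;

// Hand-written, trimmed-down alternative to the generated classes.
// JobList and Job are illustrative names, not generated ones.
[XmlRoot("jobs")]
public class JobList
{
    // Each <job> child element becomes one entry in the array
    [XmlElement("job")]
    public Job[] Items { get; set; }
}

public class Job
{
    [XmlElement("company")] public string Company { get; set; }
    [XmlElement("sector")] public string Sector { get; set; }
    [XmlElement("salary")] public string Salary { get; set; }
    [XmlElement("active")] public bool Active { get; set; }
    [XmlElement("title")] public string Title { get; set; }
}

public static class Program
{
    // Deserializes an XML string into the hand-written classes
    public static JobList Parse(string xml)
    {
        var serializer = new XmlSerializer(typeof(JobList));
        using (var reader = new StringReader(xml))
        {
            return (JobList)serializer.Deserialize(reader);
        }
    }

    public static void Main()
    {
        string xml = "<jobs><job><company>Medical Co</company><sector>Healthcare</sector>" +
                     "<salary>£60,000 - £70,000</salary><active>false</active>" +
                     "<title>Junior Doctor</title></job></jobs>";

        JobList jobs = Parse(xml);
        Console.WriteLine(jobs.Items[0].Company); // Medical Co
        Console.WriteLine(jobs.Items[0].Active);  // False
    }
}
```

The generated version has the advantage of needing no thought, so I'd only bother with this if the class names or casing matter to the rest of your code.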

Doing the Deserialization

To do the deserialization, first create an instance of XmlSerializer for the type of the object we want to deserialize to. In my case this is jobs.

    var s = new System.Xml.Serialization.XmlSerializer(typeof(jobs));

Then call Deserialize, passing in an XmlReader. I'm creating an XmlReader on the stream I used in the dynamic example.

    jobs o = (jobs)s.Deserialize(XmlReader.Create(stream));

The complete file now looks like this:

    using System;
    using System.IO;
    using System.Text;
    using System.Xml;

    namespace XMLExportExample
    {
        class Program
        {
            static void Main(string[] args)
            {
                string jobsxml = "<?xml version=\"1.0\" encoding=\"utf-8\"?><jobs> <job><company>Construction Co</company><sector>Construction</sector><salary>£50,000 - £60,000</salary><active>true</active><title>Recruitment Consultant - Construction Management</title></job><job><company>Medical Co</company><sector>Healthcare</sector><salary>£60,000 - £70,000</salary><active>false</active><title>Junior Doctor</title></job></jobs>";

                byte[] byteArray = Encoding.UTF8.GetBytes(jobsxml);
                MemoryStream stream = new MemoryStream(byteArray);

                var s = new System.Xml.Serialization.XmlSerializer(typeof(jobs));
                jobs o = (jobs)s.Deserialize(XmlReader.Create(stream));

                Console.ReadLine();
            }
        }
    }

And that's it. Any elements missing from your XML are simply left at their default values (null for a string, false for a bool) rather than causing an error.
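To see what happens with missing nodes, here's a small self-contained sketch. JobRecord and MissingNodeDemo are hypothetical names used only for this illustration (a cut-down, single-job class rather than the generated ones), and the XML deliberately leaves out the salary and active elements.

```csharp
using System;
using System.IO;
using System.Xml.Serialization;

// Hypothetical minimal class for this illustration only
[XmlRoot("job")]
public class JobRecord
{
    [XmlElement("company")] public string Company { get; set; }
    [XmlElement("salary")] public string Salary { get; set; }
    [XmlElement("active")] public bool Active { get; set; }
}

public static class MissingNodeDemo
{
    // Deserializes a single <job> element from a string
    public static JobRecord Parse(string xml)
    {
        var serializer = new XmlSerializer(typeof(JobRecord));
        using (var reader = new StringReader(xml))
        {
            return (JobRecord)serializer.Deserialize(reader);
        }
    }

    public static void Main()
    {
        // No <salary> or <active> element in this input
        JobRecord job = Parse("<job><company>Medical Co</company></job>");

        Console.WriteLine(job.Company);        // Medical Co
        Console.WriteLine(job.Salary == null); // True
        Console.WriteLine(job.Active);         // False
    }
}
```

One thing to watch: a bool left at false looks the same whether the element said false or wasn't there at all, so if that difference matters to you, a nullable or string property may be a better fit.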