When you’re working on a new website build, as either the marketer responsible for the site or the project manager overseeing its build, some of the most frustrating tasks using up your budget can originate from the IT team and come under the category of setup.

They can suck days, even weeks, from a project but offer no visible features or benefit to the end user. Worse still, they often need to come at the start of a project, which, for people wanting to see a design turn into a webpage, just feels like a delay and a lack of progress.

Manual Deployments

So, what are automated deployments and why are they worth it? Well, first let’s examine what a deployment is.

When a change or new feature is ready to be built for a site, it will have been turned into a specification and handed over to a development team to build. The developers will then write some code and test it on their local machines; they may even demo it to someone else. At some point, though, that work needs to get from their machine to the server(s) it runs on so that other people, like QA, can see it without using the developer’s machine. Typically, there will be three server environments set up for a website:

- Dev / Test – An environment used by the developers and QA to build, test and refactor new features and fixes.

- UAT / Staging – An environment used by the website owners / stakeholders that the features have been deployed to for review and approval before they are deployed into production.

- Production – The live website that is accessible over the internet.

In a manual deployment setup, the developer is responsible for copying the website into each of the environments. This will generally involve:

- Getting the version of code to be deployed from source control.

- Publishing a build of the site locally (some languages need to be compiled into machine code, and most modern websites will run a task on the CSS and JavaScript to obfuscate and reduce the file size).

- Logging in to the databases and running any scripts to update them.

- Logging in to the web servers using something like a remote desktop client.

- Copying and pasting the new files onto the server.

- Making some sort of record that the deployment has been done and what it included.

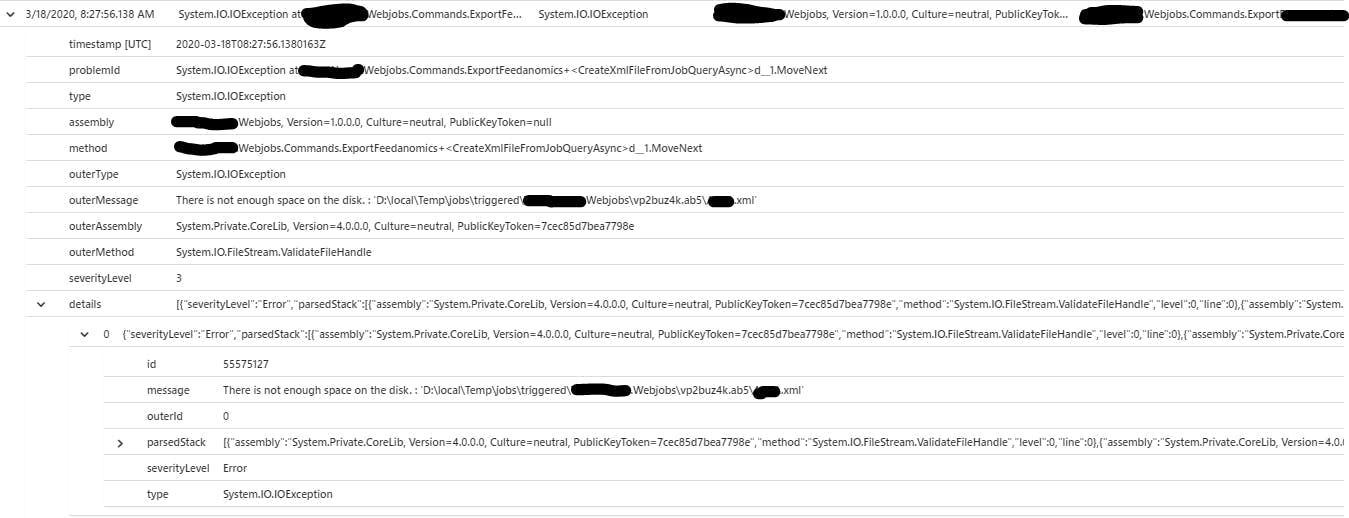

As you can probably guess, each of these steps is prone to human error. What if the developer gets the wrong version from source control? What if they overwrite the wrong folder? What if they miss the database changes? What if they don’t record the deployment? How can we then be sure what version is on an environment?

Not only that, but it’s also a lengthy task for someone to do. Some parts are just waiting for a code publish to complete or a file copy to finish. For the odd production deploy with a decent checklist this might not be so bad, but for iterating work into QA it causes two problems. First, QA failures for minor things become really costly to fix. Second, teams start bundling more and more changes together to cut down the number of releases, which reduces the flow of work feeding into QA and slows the pace at which features can be developed and released.

A Better Way

Now that we understand the issues, we certainly don’t want to pay that cost throughout the lifetime of our site. So how do we avoid it?

Well, automated deployments quite simply take all these manual steps and automate them, with a few bonuses on top. By doing this we remove the elements of human error from these steps and are left with consistent results each time.

There are two parts to an automated pipeline: the first is build and the second is release.

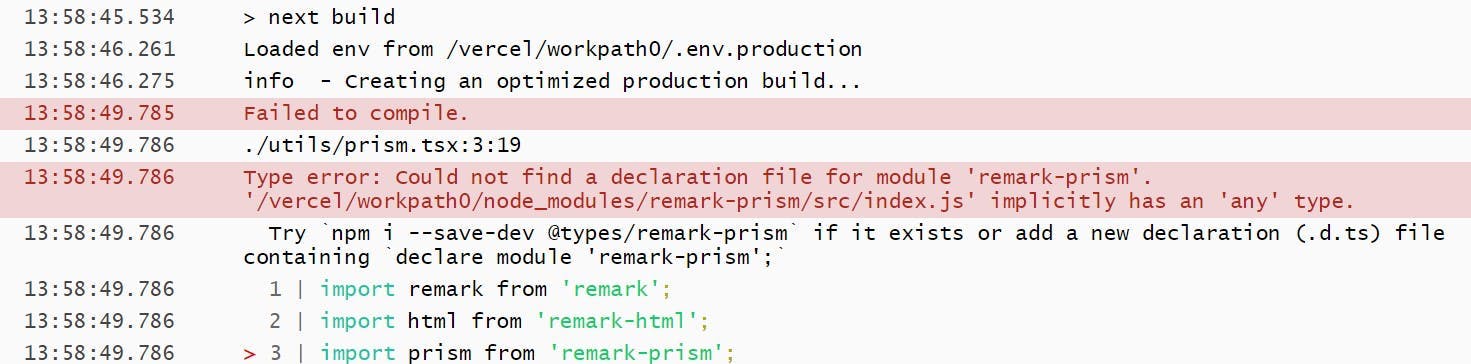

The build part relates to the first two steps of our manual process. We need to get the code from source control and then publish it. Once we’ve done this, we are left with a build that we can assign a release number to. By automating it we’ve also ensured that the build happens the same way each time, rather than being affected by differences between developers’ machines. At this point we can also start detecting build failures and include some automated testing to pick up on errors before even involving QA.
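To make the build part a little more concrete, here is a minimal Python sketch of the idea: the same steps run in the same order every time, the first failure stops the build, and the result carries a build number. The names `Build` and `run_build`, the step names and the build number are all made up for illustration; a real pipeline would call out to source control, a compiler and a test runner rather than the placeholder steps used here.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Build:
    number: int            # release number assigned to this build
    commit: str            # source control revision the build came from
    succeeded: bool
    failed_step: str = ""  # name of the step that failed, if any


def run_build(number: int, commit: str,
              steps: List[Tuple[str, Callable[[], bool]]]) -> Build:
    """Run every step in order and stop at the first failure."""
    for name, step in steps:
        if not step():
            return Build(number, commit, succeeded=False, failed_step=name)
    return Build(number, commit, succeeded=True)


# Placeholder steps (assumptions): each one simply reports success.
steps = [
    ("get code from source control", lambda: True),
    ("publish the site", lambda: True),
    ("run automated tests", lambda: True),
]

build = run_build(42, "abc123", steps)
```

Because the step list lives in one place, every build follows it exactly, and a failing test is caught before anything reaches QA.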

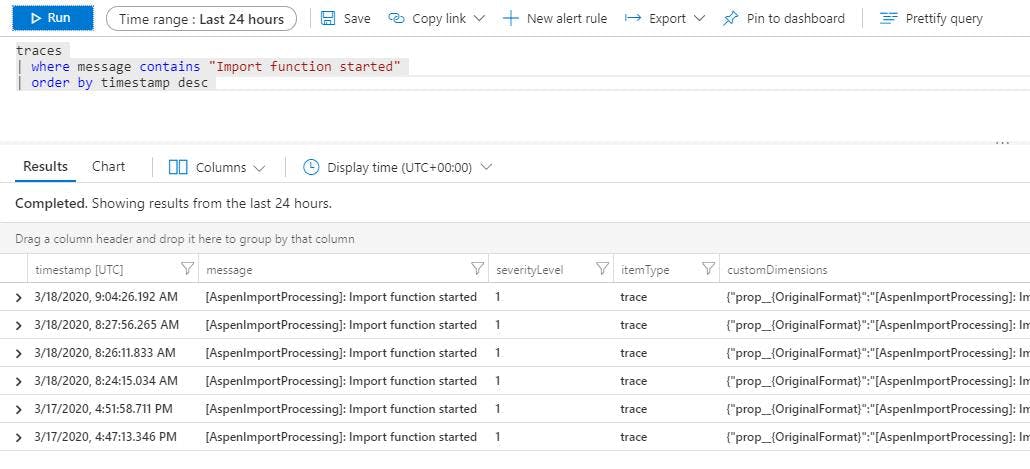

The release part relates to the remaining steps of the manual process. First of all, the process of copying files and running scripts now becomes one large script that won’t miss a step. In addition, now that we have build numbers, we can see which build is on each environment and promote only the one we want to the next environment. Because we’re promoting builds rather than doing the whole build process again, we’re also ensuring that the build going to production is exactly the same one that was tested on QA and then reviewed on UAT. With some clever integrations with things like GitHub and Jira, we can also automate release notes to say what was included in each release.
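The idea of promoting a build, rather than rebuilding it, can be sketched in a few lines of Python. This is only an illustration under assumptions: the `ReleasePipeline` class, the environment names and the build number are hypothetical, and the actual copying of files and running of scripts is left out. What it shows is that a build can only reach an environment by way of the one before it, and that every deployment is recorded.

```python
from typing import Dict, List, Optional

# Environment order is an assumption based on a typical three-stage setup.
ENVIRONMENTS = ["dev", "uat", "production"]


class ReleasePipeline:
    def __init__(self) -> None:
        # Which build number is currently on each environment.
        self.deployed: Dict[str, Optional[int]] = {env: None for env in ENVIRONMENTS}
        # A record of every deployment, in the order it happened.
        self.history: List[str] = []

    def deploy_new_build(self, build_number: int) -> None:
        """New builds always enter through the first environment."""
        self._deploy(ENVIRONMENTS[0], build_number)

    def promote(self, target: str) -> int:
        """Copy whatever build is on the previous environment to the target."""
        index = ENVIRONMENTS.index(target)
        if index == 0:
            raise ValueError("nothing comes before the first environment")
        source = ENVIRONMENTS[index - 1]
        build_number = self.deployed[source]
        if build_number is None:
            raise ValueError(f"no build has been deployed to {source} yet")
        self._deploy(target, build_number)
        return build_number

    def _deploy(self, env: str, build_number: int) -> None:
        self.deployed[env] = build_number
        self.history.append(f"build {build_number} -> {env}")


pipeline = ReleasePipeline()
pipeline.deploy_new_build(42)
pipeline.promote("uat")
pipeline.promote("production")
```

After these three calls, all three environments hold build 42 and `pipeline.history` lists each deployment, which answers the question of what version is on an environment.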

Degrees of Automation

It’s worth noting at this point that there are different degrees of automation, and this will greatly affect the cost of setup.

As well as automating the deployment of code, we could also automate the entire server setup through the use of ARM templates in Azure. Different levels of automated testing can be implemented, from very low code coverage to very high; the level of code coverage could even be a test in its own right, failing the build if it drops too low.
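Treating code coverage as a test is simpler than it sounds. As a rough sketch in Python, with made-up function names and a threshold chosen purely for illustration, the build step only needs to compare the measured coverage against an agreed minimum:

```python
def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Percentage of lines exercised by the automated tests."""
    if total_lines == 0:
        return 100.0  # nothing to cover, so nothing is uncovered
    return 100.0 * covered_lines / total_lines


def coverage_gate(covered_lines: int, total_lines: int,
                  minimum_percent: float) -> bool:
    """Return True when coverage meets the agreed minimum."""
    return coverage_percent(covered_lines, total_lines) >= minimum_percent


# Hypothetical numbers: 800 of 1,000 lines covered, minimum set at 75%.
passes = coverage_gate(800, 1000, minimum_percent=75.0)
```

In practice the covered and total line counts would come from whatever coverage tool the project uses; the point is only that the threshold becomes one more pass or fail check in the build.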

Automation could also extend to include load balancers for blue/green deployments, where zero downtime of the site is achieved by using multiple production servers and switching traffic between them so that the site stays up while files are being copied.
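The switching between production servers can be pictured with a small Python sketch. Everything here is an assumption for illustration: the `BlueGreenRouter` class stands in for a load balancer, and the two slots stand in for two sets of production servers. The new build is copied to whichever slot is not serving visitors, and only then is traffic switched over.

```python
from typing import Dict, Optional


class BlueGreenRouter:
    def __init__(self) -> None:
        # Build number on each set of production servers.
        self.versions: Dict[str, Optional[int]] = {"blue": None, "green": None}
        # The slot currently receiving visitor traffic.
        self.live = "blue"

    @property
    def idle(self) -> str:
        """The slot that is not currently serving visitors."""
        return "green" if self.live == "blue" else "blue"

    def deploy(self, build_number: int) -> None:
        """Copy the new build to the idle slot; the live slot is untouched."""
        self.versions[self.idle] = build_number

    def switch(self) -> None:
        """Point visitor traffic at the idle slot once it has a build."""
        if self.versions[self.idle] is None:
            raise RuntimeError("the idle slot has no build to switch to")
        self.live = self.idle


router = BlueGreenRouter()
router.versions["blue"] = 41  # assume build 41 is already live
router.deploy(42)             # goes to green while blue keeps serving
router.switch()               # visitors now see build 42
```

A useful side effect of this design is that the previous build is still sitting on the other slot, so switching back is just as quick if something goes wrong.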

Is this the CI and CD thing I’ve heard about?

Two terms I deliberately haven’t used in this article are Continuous Integration (CI) and Continuous Delivery (CD). That’s because, although automated deployments form part of achieving them, they are not the same thing, and it’s worth knowing how they differ.

Continuous Integration relates to the build part of the automated setup and aims to deal with issues arising from multiple developers working on a project. When more than one developer works on a local copy of a website, each copy changes in different ways from what is contained within source control. The longer this goes on, the bigger the differences get and the harder it becomes to merge them again. Continuous Integration advocates shortening the gaps between merges to as short a time as possible, potentially merging multiple times per day. By having automated tools in place to run a build whenever this happens, issues can be identified as early as possible.

Continuous Delivery relates more to the release aspect of the automated setup and aims to deliver / deploy updates to a solution as quickly as possible. By doing this, deployments become trivial and smaller updates are delivered at a much faster pace rather than being queued for one big release. Automation helps make this a reality by removing many of the repetitive, time-consuming manual tasks.