Automated Deployments with Sitecore Managed Cloud

I think it's fair to say automated deployments are a fairly standard practice at this point. Long gone are the days when we would remote onto a server and copy some files over.

However, when you get your shiny new Sitecore Managed Cloud environment, unlike other cloud offerings it doesn't come with any automated deployments set up for you, which to be frank is a bit of a letdown. Getting one set up, though, isn't the hardest thing in the world.

A few requirements we're after:

- Deployments should be a button click, no copying and pasting files.

- Deployments should get promoted through multiple environments, e.g. Test, Staging and Production.

- Deployments should be gated and approved to be released.

- There shouldn't be any downtime during the deployment.

So let's dive in.

Azure DevOps

The first thing to note is that Sitecore Managed Cloud is essentially vanilla Microsoft Azure with Sitecore set up on it. As a developer or DevOps engineer you get given a regular login to the Azure portal, and as far as I've seen there's nothing specifically Sitecore about it.

This means that however you would normally deploy other things to Azure will work perfectly well for Sitecore. My preference is to use Azure DevOps: being from Microsoft, it fits really well into the Azure ecosystem.

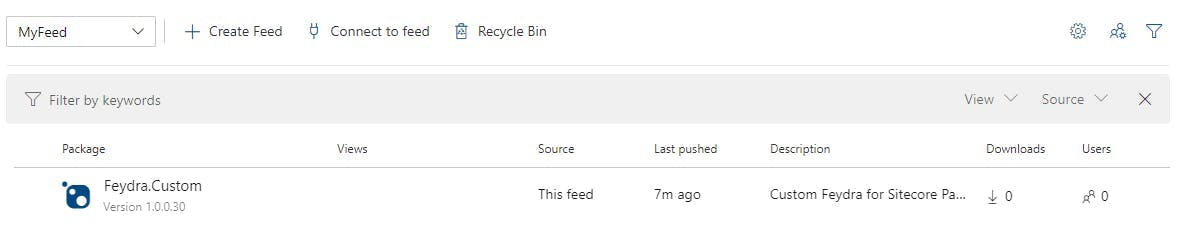

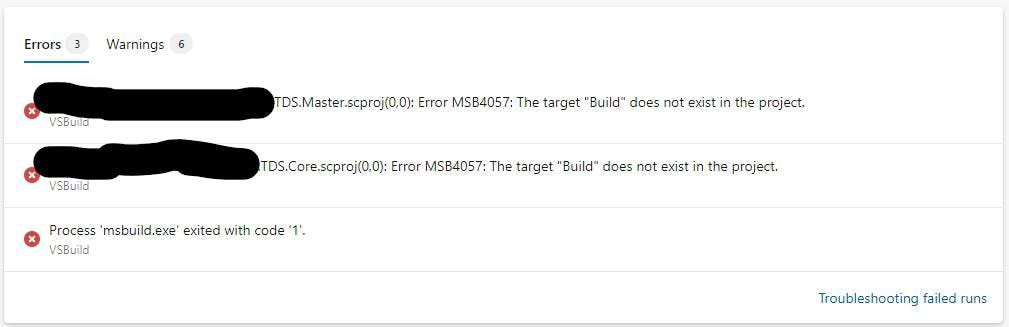

To do this, first sign up for Azure DevOps and create a pipeline that compiles your solution and outputs it as an artifact. I'm going to skip the details of how you do this, as it's not specific to Managed Cloud and lots of people have already written articles on it. There's also a high chance your solution is already doing this. If not, then go Google it :)

Release Pipeline

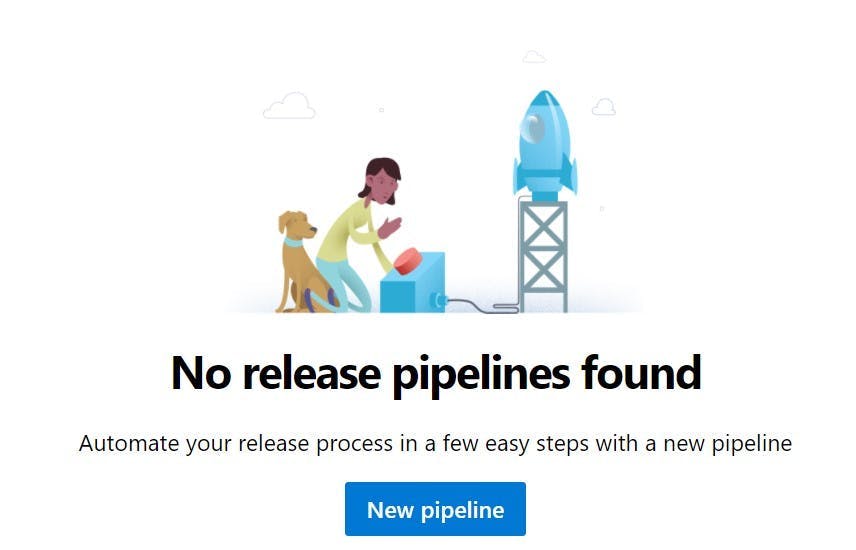

To do a release we need a release pipeline. Unlike build pipelines, which control a build and are written in YAML, release pipelines control a release and are created through a UI. With these you can configure the different steps, add gates and configure variables: everything you need for a release.

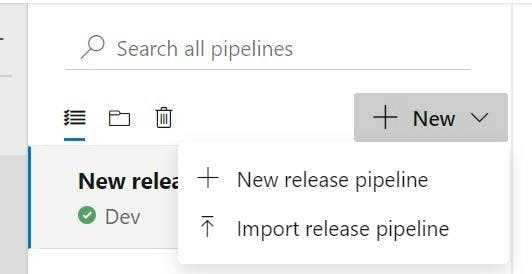

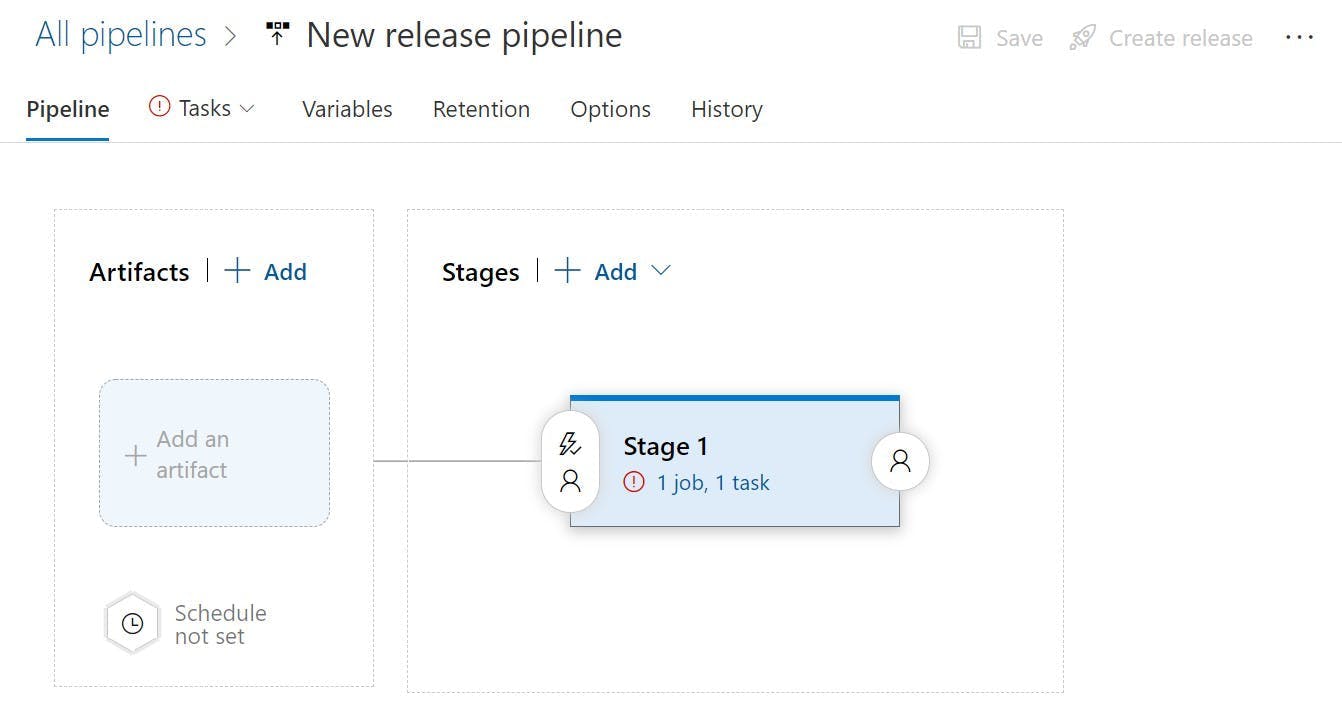

Once you've got an Azure DevOps project and your solution compiling, the next step is to create a release pipeline. So head over to the release pipeline section and click the big blue button to make your first pipeline.

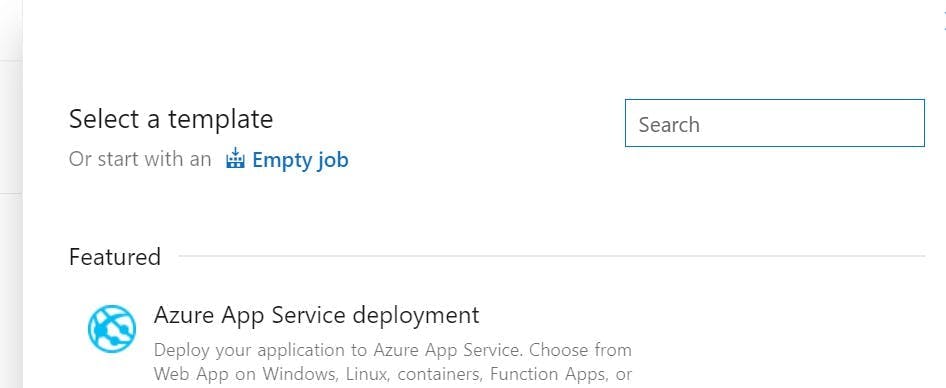

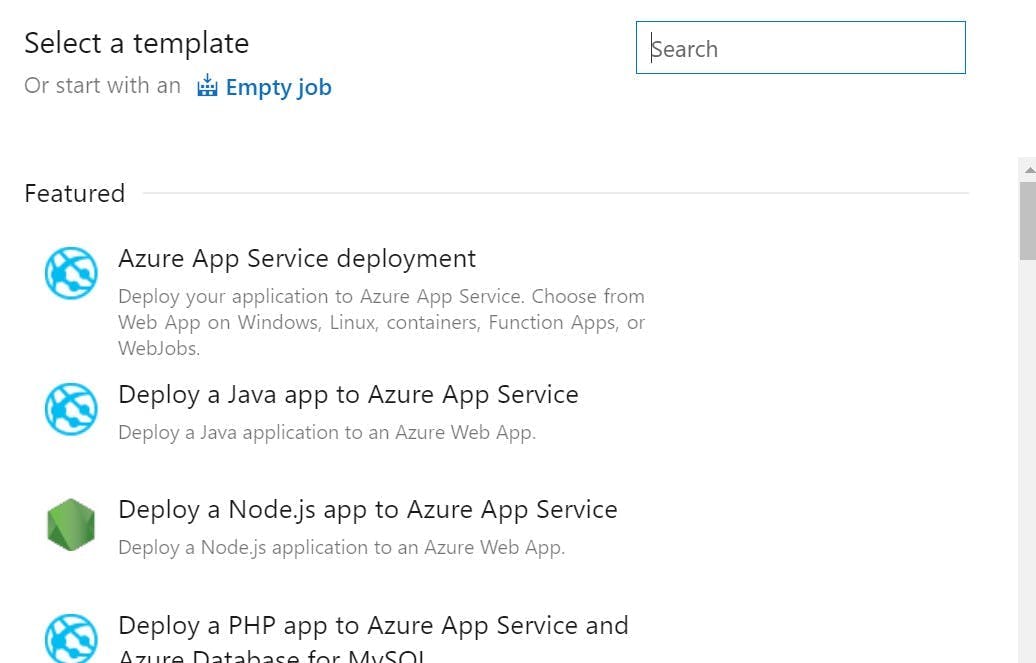

Sitecore Managed Cloud runs Sitecore as Azure App Services, so you will want to pick the Azure App Service deployment template.

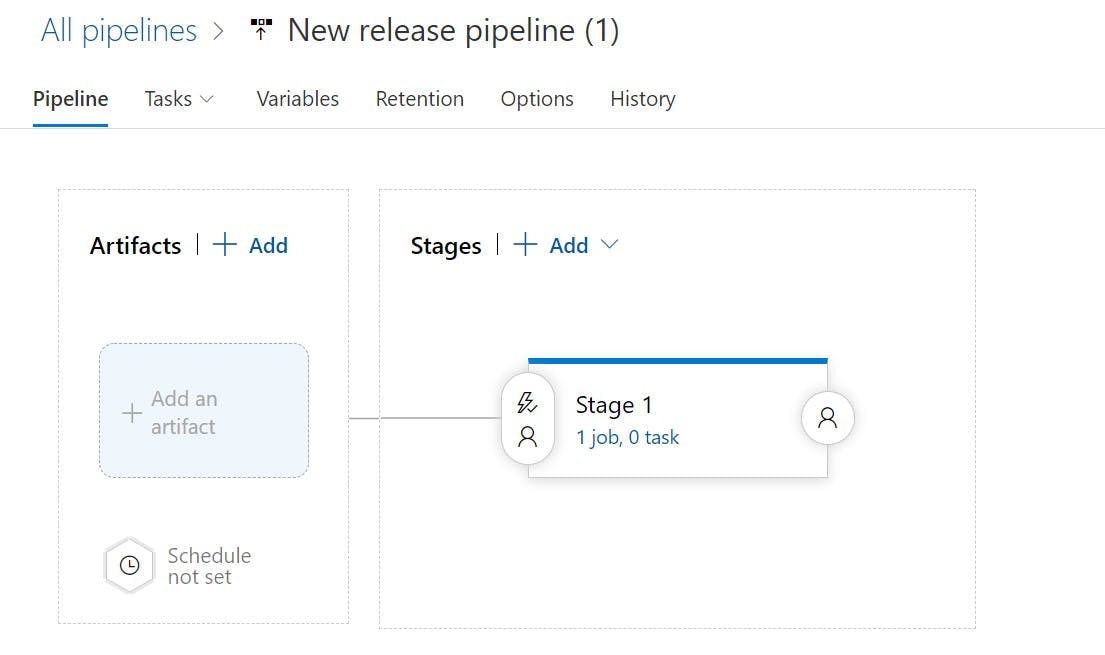

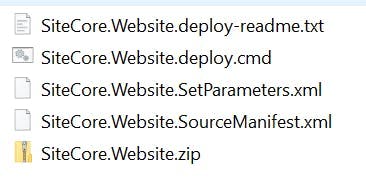

This template will create a stage with a job, but before it can release anything it needs an artifact to deploy.

Click the Add an Artifact button and pick the artifact from your build. On the artifact you can now click the lightning bolt icon to set up continuous deployment triggers. From here you can set the release to run whenever there is a new build on your master / main branch.
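The trigger itself is configured in the UI, but the rule it applies is simple enough to sketch. This is only an illustration of the branch filter, not anything Azure DevOps runs; the function and constant names are made up for the example. The `refs/heads/...` form is how Git branches are reported on a build.

```python
# Illustrative only: models the branch filter on a continuous deployment trigger.
# RELEASE_BRANCHES and should_trigger_release are hypothetical names.
RELEASE_BRANCHES = {"refs/heads/master", "refs/heads/main"}


def should_trigger_release(source_branch: str, build_succeeded: bool) -> bool:
    """Return True when a finished build should kick off a release."""
    return build_succeeded and source_branch in RELEASE_BRANCHES
```

A build from a feature branch, or a failed build on main, would not start a release under this rule.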

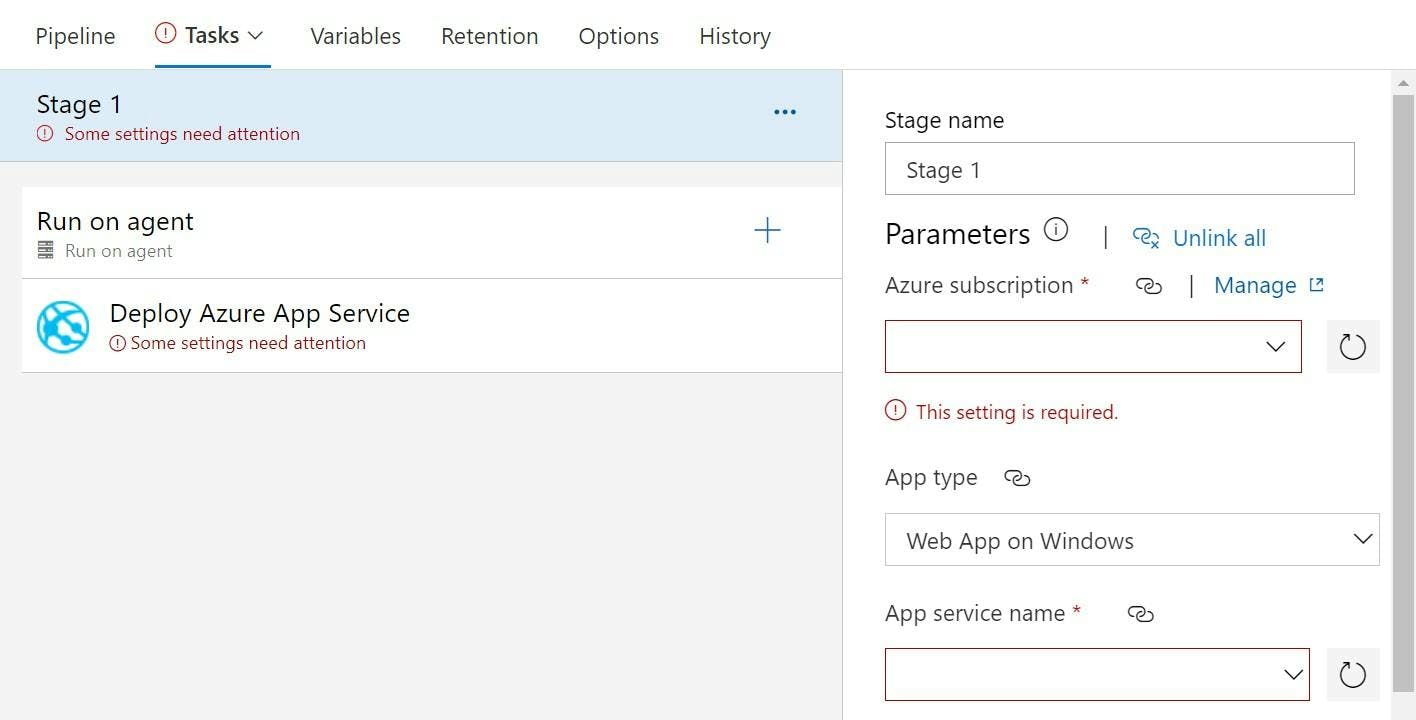

The deployment stage will also need some details completing so click into that next.

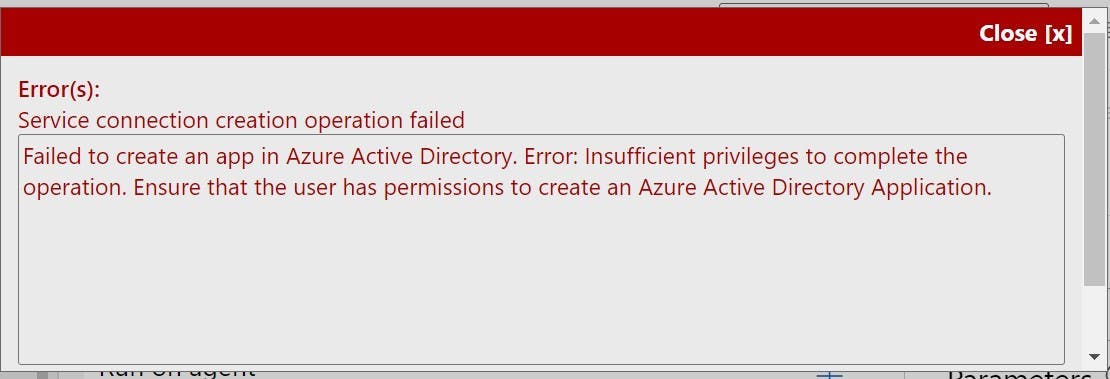

Now when you try to pick the Azure subscription for Sitecore Managed Cloud, you will likely get an error saying: "Failed to create an app in Azure Active Directory. Error: Insufficient privileges to complete the operation. Ensure that the user has permissions to create an Azure Active Directory Application."

Don't worry. You can't solve this on your own, but Sitecore Support can fix it for you. To get Azure DevOps connected to the Azure subscription you need a service principal set up, so ask them for one. They will know what you mean and send you a service principal ID and key.

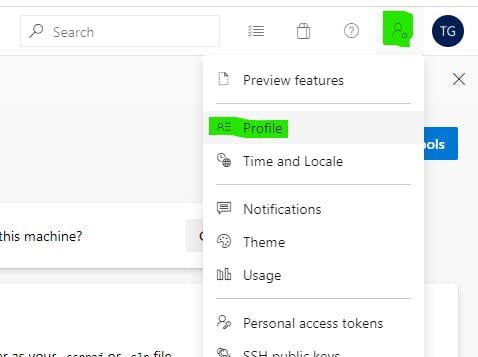

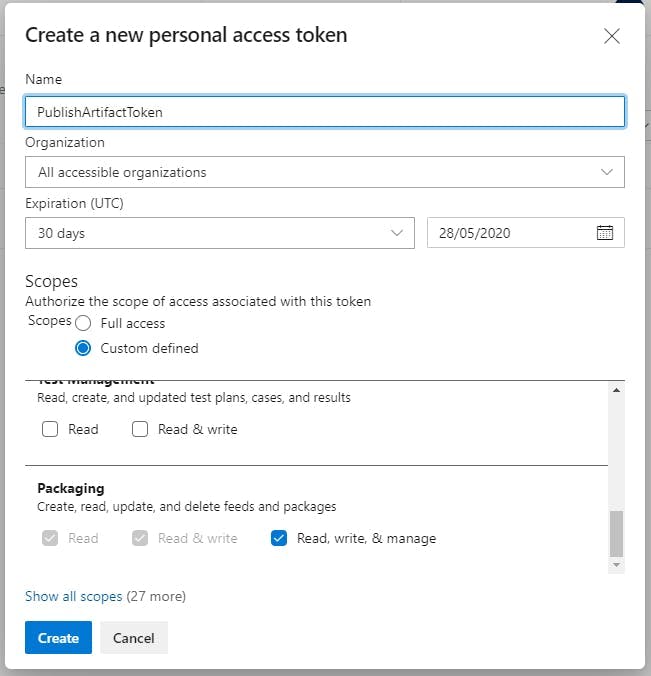

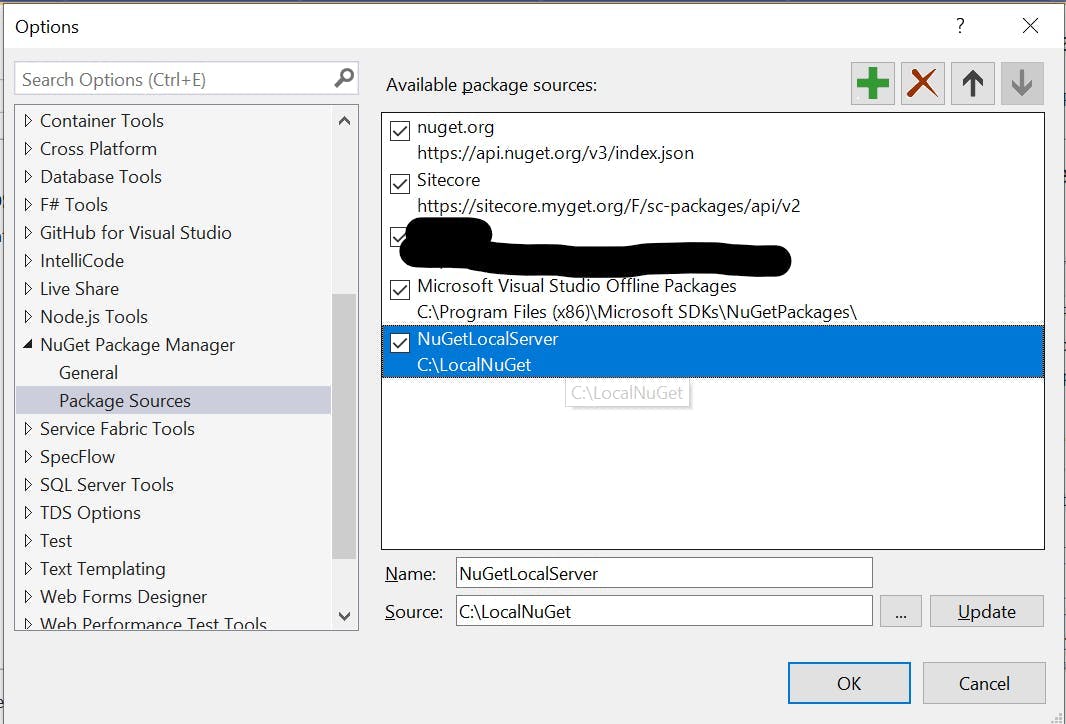

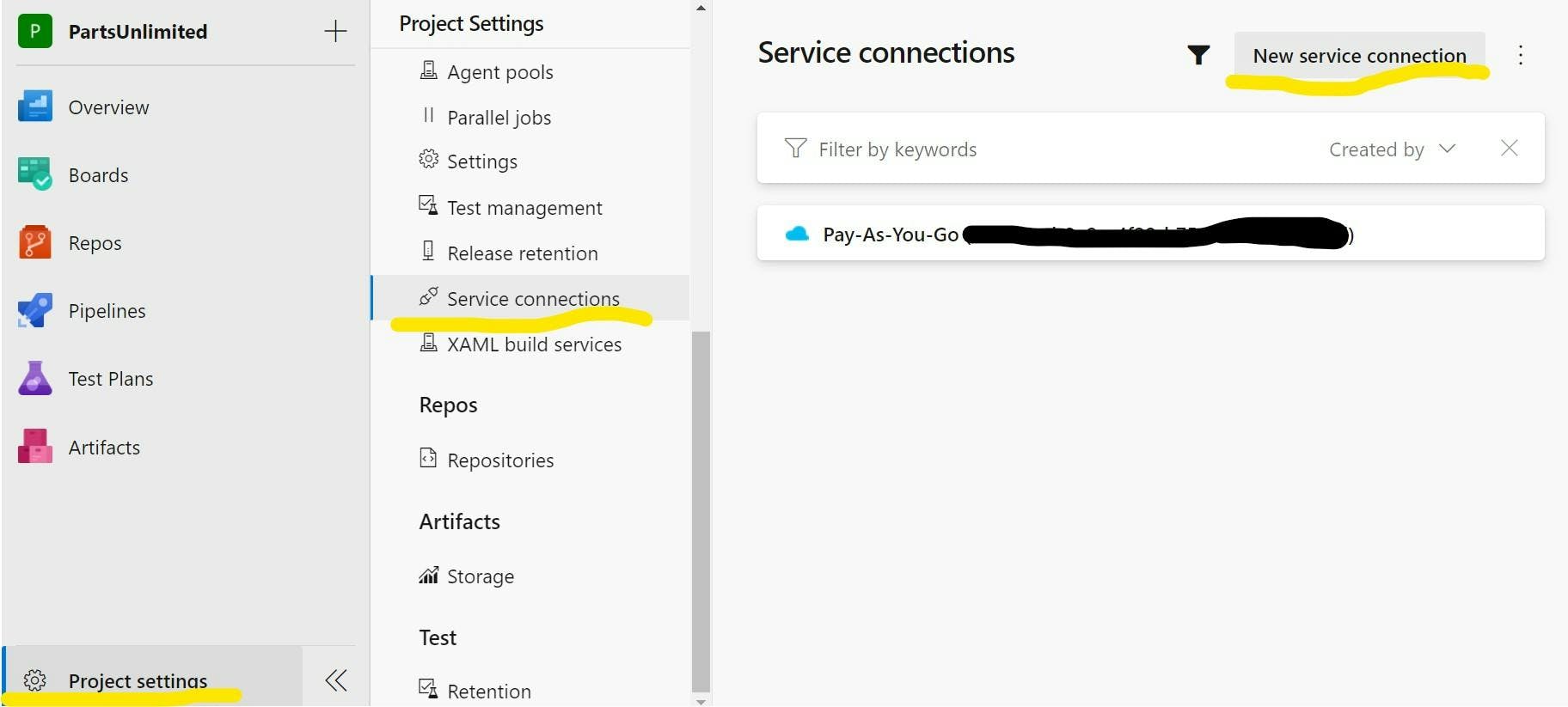

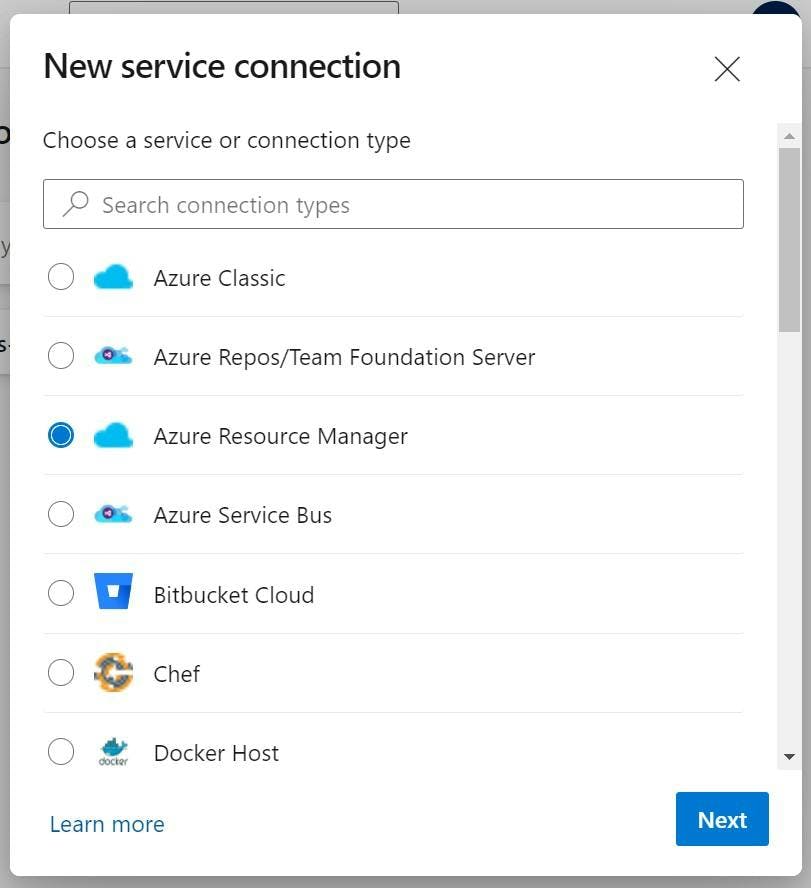

To set up the service principal in Azure DevOps, go to Project Settings > Service Connections > New Service Connection.

Pick Azure Resource Manager.

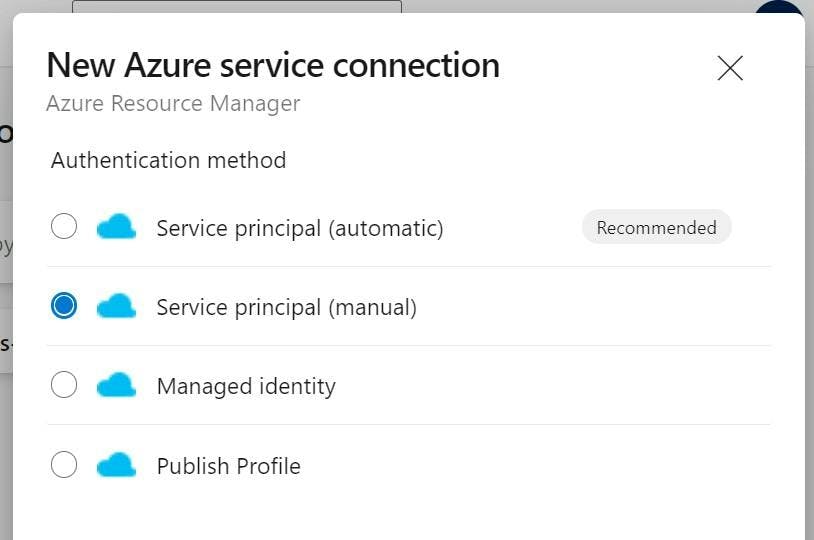

And Service Principal (manual).

Enter the details and you'll have a connection into Sitecore Managed Cloud.
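If you want to sanity check the service principal outside of Azure DevOps, a rough sketch is to build the same kind of OAuth2 client credentials request that is used to get a token for Azure Resource Manager. I'm assuming here that you also have the tenant ID to hand; `build_token_request` is a name made up for this example, and the values passed in are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(tenant_id: str, client_id: str, client_secret: str) -> Request:
    """Build (but do not send) a client credentials token request.

    client_id is the service principal ID and client_secret is the key
    supplied by Sitecore Support. tenant_id is assumed to be known.
    """
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urlencode(
        {
            "grant_type": "client_credentials",
            "client_id": client_id,
            "client_secret": client_secret,
            # Scope for the Azure Resource Manager API.
            "scope": "https://management.azure.com/.default",
        }
    ).encode("ascii")
    return Request(url, data=body, method="POST")
```

Passing the request to `urllib.request.urlopen` should return a JSON body containing an `access_token` if the ID and key are valid, and an HTTP error if they are not.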

Back in the release pipeline you can now select the Azure subscription and App service name. Run the pipeline and your solution will deploy.

You now have a solution deploying to the Azure App Service. Some things to note:

1. This process does not delete any files already on the target machine. This is intentional, as with Managed Cloud the files have a split responsibility. Sitecore installs Sitecore and manages the SSL certificates, which includes config file changes, none of which will be in your source control. You, however, are responsible for everything else.

2. Right now, whenever you deploy, the site will experience downtime as there is no blue / green deployment set up.
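To picture the first point, here is a minimal sketch of an additive deployment using local folders. It is not what the deployment task does internally, just a model of the outcome: files from the artifact overwrite files with the same path, and everything else in the web root is left alone. `overlay_deploy` and the folder names are hypothetical.

```python
import shutil
from pathlib import Path


def overlay_deploy(artifact_dir: Path, webroot: Path) -> None:
    """Copy the build output over the web root without deleting anything.

    Files with the same relative path are overwritten; files that only
    exist in the web root (e.g. the Sitecore install) are untouched.
    Requires Python 3.8+ for dirs_exist_ok.
    """
    shutil.copytree(artifact_dir, webroot, dirs_exist_ok=True)
```

The flip side is that a file you remove from source control will stay on the server until someone deletes it by hand.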

Blue / Green Deployments

One of the great things about using Azure App Services is that all the infrastructure stuff like load balancers, multiple instances etc. is done for you. If you want to scale horizontally and add instances, you just move a slider and all the deployments and load balancing are done for you.

If you want to do a blue / green deployment that can be done for you using slots.

Creating an App Service Deployment Slot

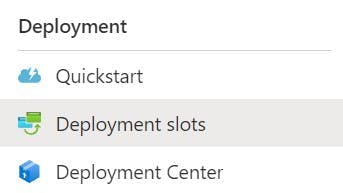

To create a slot navigate to the app service you are deploying to, and select Deployment Slots on the left nav.

Click Add Slot, give it a name (e.g. staging) and choose to clone the settings from the existing default slot.
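If you would rather script this than click through the portal, the Azure CLI has an equivalent command. The sketch below just builds the argument list and hands it to `subprocess`; it assumes the `az` CLI is installed and logged in with access to the subscription, and the app and resource group names are placeholders. The helper names are made up for the example.

```python
import subprocess


def slot_create_command(app_name: str, resource_group: str, slot: str = "staging") -> list:
    """Build the az CLI call that creates a slot cloned from production settings."""
    return [
        "az", "webapp", "deployment", "slot", "create",
        "--name", app_name,
        "--resource-group", resource_group,
        "--slot", slot,
        # Passing the app's own name clones settings from the production slot.
        "--configuration-source", app_name,
    ]


def create_slot(app_name: str, resource_group: str, slot: str = "staging") -> None:
    """Run the command; raises CalledProcessError if az reports a failure."""
    subprocess.run(slot_create_command(app_name, resource_group, slot), check=True)
```

Calling `create_slot("my-sitecore-cd", "my-resource-group")` should leave you in the same place as the portal steps.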

You will now have a second slot that can be deployed to and then swapped to be the live one. When slots are swapped the application is warmed up first, meaning no downtime when the swap happens.

However, although you copied the settings from the original slot, your staging slot won't have an application on it yet, and as your source control won't contain the whole of Sitecore, you will need to copy it from the existing slot.

Connecting to Deployment Slots with FTP

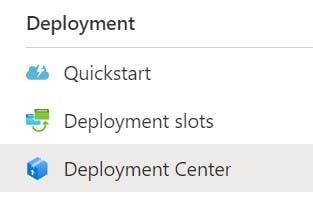

The easiest way to set up the slot with the base files is to copy them with FTP. To get the FTP details, select one of the slots from the slots list and then select Deployment Center in the left nav.

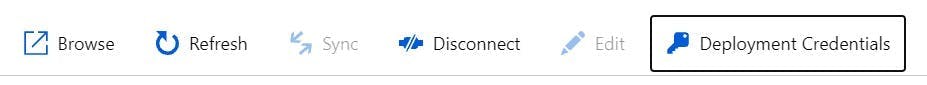

Now in the top nav, select Deployment Credentials.

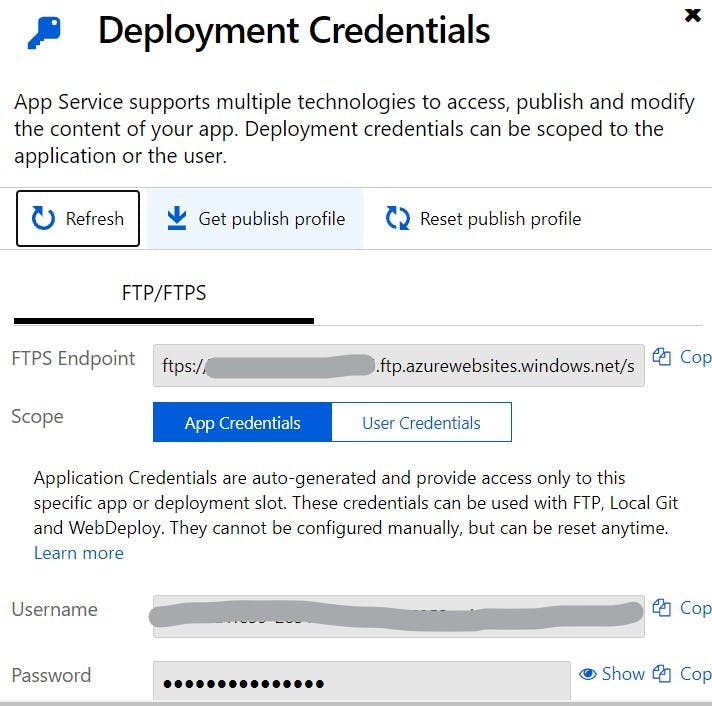

A panel will now show giving you the FTP details.

Repeat this with the other slot and you now have the details to be able to FTP to the different environments and copy the contents from one to the other.
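Any FTP client will do for the copy, but if you want to script it, a rough sketch with Python's `ftplib` looks like this. It assumes the server supports the `MLSD` listing command (I haven't verified this for every App Service setup) and it buffers each file in memory, so treat it as a starting point. `copy_tree` is a name made up for the example.

```python
import io
from ftplib import error_perm


def copy_tree(src, dst, path):
    """Recursively copy everything under `path` from one FTP connection to another.

    src and dst are connected, logged-in ftplib.FTP (or FTP_TLS) objects.
    """
    for name, facts in src.mlsd(path):
        if name in (".", ".."):
            continue
        full_path = f"{path}/{name}"
        kind = facts.get("type")
        if kind == "dir":
            try:
                dst.mkd(full_path)
            except error_perm:
                pass  # most likely the directory already exists
            copy_tree(src, dst, full_path)
        elif kind == "file":
            buffer = io.BytesIO()
            src.retrbinary(f"RETR {full_path}", buffer.write)
            buffer.seek(0)
            dst.storbinary(f"STOR {full_path}", buffer)
```

You would open one connection per slot using the details from the portal (with `ftplib.FTP_TLS` followed by `prot_p()` if you are connecting over FTPS) and call `copy_tree(src, dst, "/site/wwwroot")`, which is the usual web root on an App Service.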

Configuring Azure Pipelines to deploy to a slot

The last thing on our list is to start deploying to the slot and then swapping the slot to achieve no downtime.

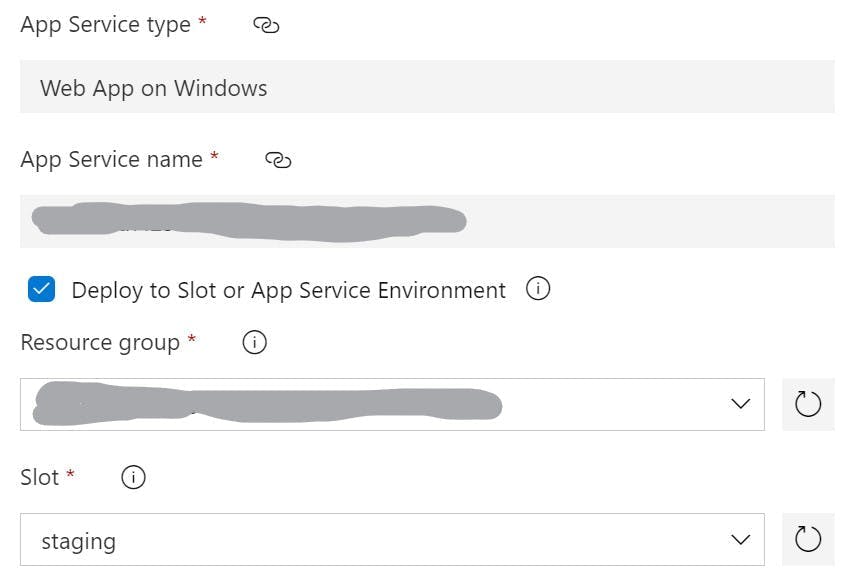

Within Azure DevOps, navigate back to the release pipeline and edit the pipeline. Go into the job/task and select the Deploy Azure App Service task. Under the App Service Name setting you will see a checkbox to deploy to a slot. Select this and then complete the details for Resource Group and Slot.

If you were to deploy now, your code would be deployed to the slot rather than the production instance. You could then preview the site running on the slot and manually swap the slots in the Deployment slots section of the portal.
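For the preview step, a slot gets its own default hostname in the form `<app name>-<slot name>.azurewebsites.net`. Here is a small sketch of a smoke check against it, assuming the default hostname is in use (custom domains or an isolated environment will differ). Both function names are made up for the example.

```python
from urllib.request import urlopen


def slot_url(app_name: str, slot: str) -> str:
    """Default hostname Azure gives a non-production deployment slot."""
    return f"https://{app_name}-{slot}.azurewebsites.net"


def slot_is_healthy(app_name: str, slot: str, timeout: float = 60.0) -> bool:
    """Return True if the slot's home page answers with HTTP 200."""
    try:
        with urlopen(slot_url(app_name, slot), timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers connection failures, timeouts and HTTP error responses.
        return False
```

The generous timeout is deliberate: the first request to a freshly deployed Sitecore instance can take a while to come back.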

Alternatively you can configure the release pipeline to swap the slots for you.

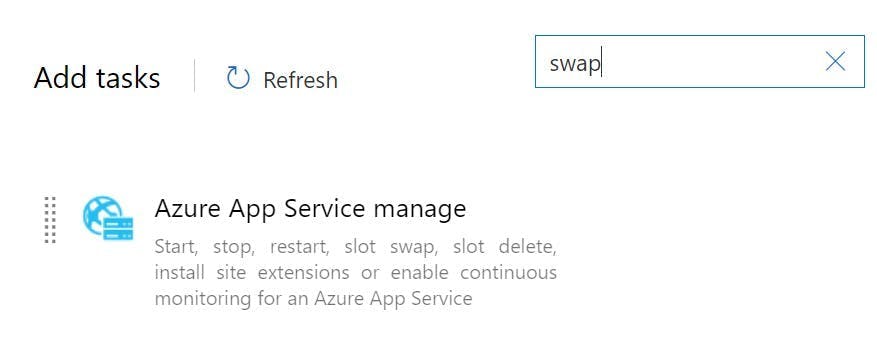

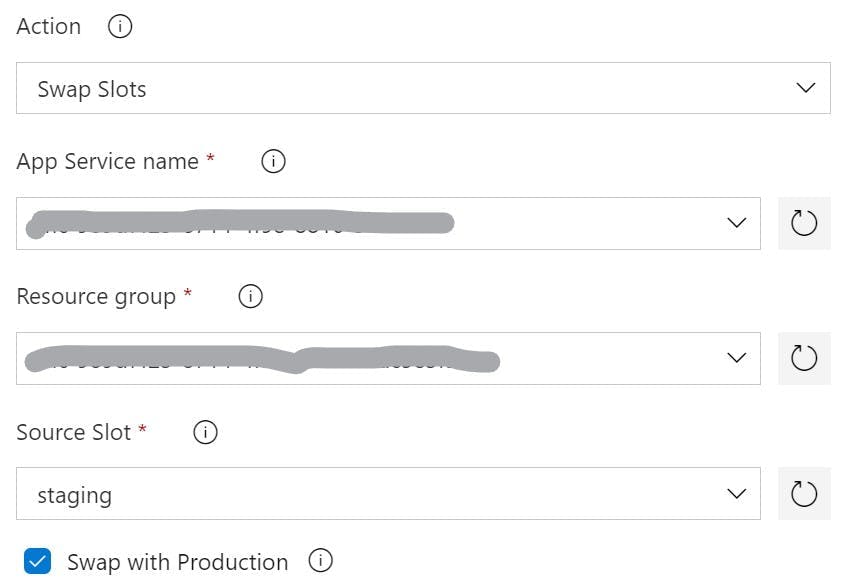

Add a new task to the pipeline job called Azure App Service Manage. This will come up if you search for swap.

Complete the details for the task, setting Action to Swap Slots, and enter your App Service name, Resource group, and the Source Slot that it needs to swap with. Check the checkbox to Swap with Production.
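For reference, the same swap can be done from a script with the Azure CLI. As before this is only a sketch: it assumes `az` is installed and logged in, the app and resource group names are placeholders, and the helper names are made up for the example.

```python
import subprocess


def slot_swap_command(app_name: str, resource_group: str,
                      source_slot: str = "staging",
                      target_slot: str = "production") -> list:
    """Build the az CLI call that swaps source_slot with target_slot."""
    return [
        "az", "webapp", "deployment", "slot", "swap",
        "--name", app_name,
        "--resource-group", resource_group,
        "--slot", source_slot,
        "--target-slot", target_slot,
    ]


def swap_slots(app_name: str, resource_group: str) -> None:
    """Run the swap; raises CalledProcessError if az reports a failure."""
    subprocess.run(slot_swap_command(app_name, resource_group), check=True)
```

This can be handy for rolling back: swapping again puts the previous version back in production.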

With this all configured you can now run your pipeline and have an automated deployment with no downtime. The solution will first be deployed to the slot and then the slot swap action will swap the two over. When it does this the staging slot will be warmed up first meaning that when the swap completes there will be no downtime as Sitecore will have already started.